Once you catch the automated testing itch, you want to write tests for everything. But should we use the same strategy for every piece of software? I’ve come to the conclusion that the answer is no. While I’m completely committed to the practice of TDD and aggressive test coverage, I’ve found that legacy software needs to be approached strategically.

Wikipedia defines a “legacy system” as

...an old method, technology, computer system, or application program that continues to be used, typically because it still functions for the users' needs, even though newer technology or more efficient methods of performing a task are now available. (http://en.wikipedia.org/wiki/Legacy_software)

For this article I’ll be using a much narrower definition: “legacy software” is software that was written without automated tests.

Cement Shoes

After I drank the TDD Kool-Aid, I wanted to write tests for everything. As the Technical Lead on a legacy system, I began back-filling the application with tests. Before long, I realized that I was fitting this app with a large pair of cement shoes.

As a committed agilist, I was undermining my ability to respond to change by adding unit tests. Blindly applying a valuable practice was harming one of my core values. I was writing unit tests for code that might not be correct or might not even be executed in production. This large enterprise application contained hundreds of thousands of lines of code, much of it spaghetti code that had never been tamed by test assertions.

Reverse-engineering a unit test for each method was time-consuming and mindless. Instead of designing expressive tests that reflected business requirements, I found myself creating awkward, confusing, poorly named tests. In many cases the code could not be tested without changing the very code that I was testing. As I diligently slogged through the code, I realized that I was not adding value to this application. In fact, I was pouring cement around poorly written code.

Test Types

Up until now I have been talking about unit tests. These are the most common type of automated test, but they are not the only one. For this discussion I’ll divide tests into three types: unit, integration and customer. I’m sure that someone will disagree with this narrow breakdown. Nevertheless, understanding it will help us understand how to test legacy systems. Unit tests verify a single piece of functionality in complete isolation. Integration tests, on the other hand, verify that related pieces of functionality work together. Lastly, customer tests verify the behavior of the software from the user’s perspective.

Avoid associating these categories with tools. Unit tests are not synonymous with JUnit tests, and customer tests are not synonymous with Selenium or Cucumber. I can write any of these types of tests in JUnit, or in a variety of other tools for that matter.

One other thing needs to be said: customer tests can blur the boundary between unit and integration testing. The only requirement is that a customer test focuses on user interactions. Users only see the final value, not all the individual functions that were called to calculate and format that value. Unit tests and integration tests, by contrast, are mutually exclusive. Unit tests exercise a single function in isolation, while integration tests exercise functions in concert.
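The following minimal Python sketch makes the three categories concrete. It is built around a hypothetical tip calculator, and the functions `lookup_rate`, `compute_tip` and `tip_for` are assumptions made for illustration, as are the rates. Each test class targets one category. They use Python's standard `unittest` module rather than JUnit, which also shows that the category is about scope, not tooling.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical tip calculator; rates, regions and symbols are made up.
RATES = {"US": Decimal("0.15"), "UK": Decimal("0.10")}
SYMBOLS = {"US": "$", "UK": "£"}

def lookup_rate(region: str) -> Decimal:
    return RATES[region]

def compute_tip(amount: Decimal, rate: Decimal) -> Decimal:
    # Round to whole currency units, halves rounding up.
    return (amount * rate).quantize(Decimal("1"), rounding=ROUND_HALF_UP)

def tip_for(amount: str, region: str) -> str:
    # The only thing a user touches: text in, formatted text out.
    tip = compute_tip(Decimal(amount), lookup_rate(region))
    return f"{SYMBOLS[region]}{tip}"

class UnitTest(unittest.TestCase):
    def test_compute_tip_in_isolation(self):
        # The rate is passed in directly, so no other function is involved.
        self.assertEqual(compute_tip(Decimal("10"), Decimal("0.25")), Decimal("3"))

class IntegrationTest(unittest.TestCase):
    def test_rate_lookup_feeds_computation(self):
        # Two internal functions exercised together.
        self.assertEqual(compute_tip(Decimal("40"), lookup_rate("US")), Decimal("6"))

class CustomerTest(unittest.TestCase):
    def test_user_sees_formatted_tip(self):
        # Only the user's inputs and the value they see; internals are invisible.
        self.assertEqual(tip_for("40.00", "US"), "$6")
        self.assertEqual(tip_for("25.00", "UK"), "£3")

if __name__ == "__main__":
    unittest.main(argv=["test-types"], exit=False)
```

The customer test would keep passing if `compute_tip` were rewritten or inlined. The unit test would not.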

Now that I’ve beaten a dead horse I will move on to legacy software.

Encapsulation

The problem that I encountered when I started writing unit tests for my legacy code was one of encapsulation. I was verifying the implementation at the wrong level of abstraction. Let me be clear: well-written unit tests do not undermine the principles of encapsulation. However, in the case of this legacy system, the only thing I was certain of was that the customer interface was correct. Taken together, the units of functionality within the system produced a consistent user experience, but any one piece of functionality was not necessarily correct.

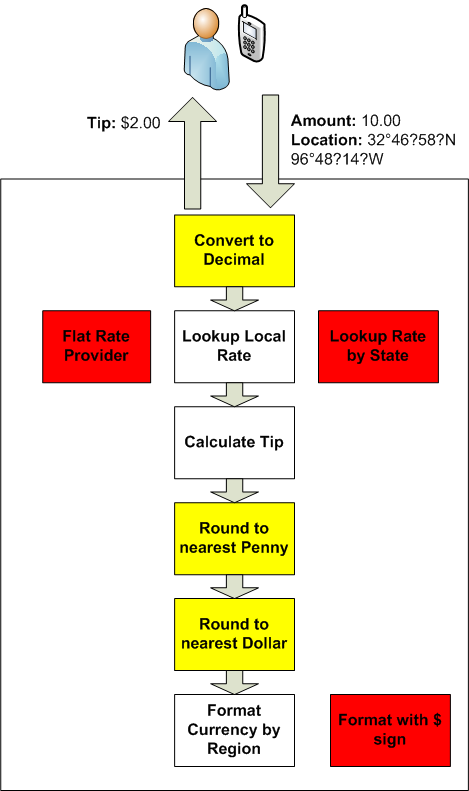

To illustrate this point, let’s take a look at a trivial application: the tip calculator shown above.

There are two primary levels of encapsulation within this application. The first is at the system level; the second is at the level of each function. Within a legacy system we must preserve the customer experience. Whether any individual unit test passes or fails, the customer tests must confirm that the customer experience is preserved.

In the example above there are three yellow boxes and three red boxes. The red boxes represent functionality that is no longer called by the application. The yellow boxes represent incorrect functionality. The top yellow box converts the incoming number to a decimal. While this may have been valuable in the past, the application now already passes in a decimal value. This conversion is unnecessary, and it should not be preserved with a test.

The next two red boxes contain rate look-up functionality that’s no longer used. Writing tests around this code would not only be useless, but it would also ensure that the code could not be easily removed. The next two yellow boxes have a couple of issues. First of all, rounding to the penny before you round to the dollar is unnecessary and distracting. But even more importantly, why does the application look up local rates and format based on the region, yet round based on US currency? Finally, the last red box represents dead formatting code.
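The boxes can be sketched as hypothetical Python code. Every function name, rate and region below is an assumption made for illustration. Only the shape comes from the description above: a redundant conversion, two unused rate lookups, two-step rounding, and dead formatting code.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical legacy code; comments map each function to a box in the diagram.
LOCAL_RATES = {"US": Decimal("0.15"), "UK": Decimal("0.10")}

def to_decimal(value):
    # Yellow: callers already pass a Decimal, so this conversion is redundant.
    return Decimal(str(value))

def lookup_rate_by_zip(zip_code):
    # Red: no longer called anywhere.
    return Decimal("0.18")

def lookup_rate_by_state(state):
    # Red: no longer called anywhere.
    return Decimal("0.18")

def round_to_penny(tip):
    # Yellow: an extra rounding step nobody needs.
    return tip.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

def round_to_dollar(tip):
    # Yellow: written for whole US dollars, yet applied to every region.
    return tip.quantize(Decimal("1"), rounding=ROUND_HALF_UP)

def format_old_style(tip):
    # Red: dead formatting code.
    return "USD " + str(tip)

def calculate_tip(amount, region):
    # The path users actually exercise.
    amount = to_decimal(amount)
    tip = round_to_dollar(round_to_penny(amount * LOCAL_RATES[region]))
    symbol = {"US": "$", "UK": "£"}[region]
    return f"{symbol}{tip}"
```

A customer test would call only `calculate_tip`. Unit tests around `lookup_rate_by_zip` or `format_old_style`, by contrast, would pin code that should simply be deleted.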

While this is a tiny example, it represents a significant issue. These types of issues are sprinkled throughout large legacy code bases. Blindly plugging test holes in legacy systems can be ineffective and even harmful. Pouring testing cement around the previous example would just make it harder to change. Nevertheless, you should not make changes without customer tests.

The only thing that we need to preserve in a legacy system is the user experience. So the right level of encapsulation is at the UI level. Any code can be changed, added or deleted as long as the user can send an amount and location and get an accurate tip.
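Here is a minimal sketch of what a customer-level test for the tip example might look like. It assumes two hypothetical functions with made-up rates, regions and cases:

- `legacy_tip` keeps the redundant conversion and the two-step rounding described above.
- `refactored_tip` removes both.

The checks only compare what the user enters with what the user sees, so the same cases can judge either implementation.

```python
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical rates and currency symbols, for illustration only.
RATES = {"US": Decimal("0.15"), "UK": Decimal("0.10")}
SYMBOLS = {"US": "$", "UK": "£"}

def legacy_tip(amount, region):
    # Legacy path: redundant conversion plus penny-then-dollar rounding.
    amount = Decimal(str(amount))
    tip = (amount * RATES[region]).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    tip = tip.quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    return f"{SYMBOLS[region]}{tip}"

def refactored_tip(amount, region):
    # Cleaned-up path: one rounding step. Matches legacy_tip on CUSTOMER_CASES.
    tip = (amount * RATES[region]).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    return f"{SYMBOLS[region]}{tip}"

# Customer-level cases: what the user enters and what the user sees, nothing else.
CUSTOMER_CASES = [
    (Decimal("40.00"), "US", "$6"),
    (Decimal("12.00"), "US", "$2"),
    (Decimal("25.00"), "UK", "£3"),
]

def failing_customer_cases(tip_fn):
    """Return (amount, region, expected, actual) for every case tip_fn gets wrong."""
    failures = []
    for amount, region, expected in CUSTOMER_CASES:
        actual = tip_fn(amount, region)
        if actual != expected:
            failures.append((amount, region, expected, actual))
    return failures

if __name__ == "__main__":
    for impl in (legacy_tip, refactored_tip):
        print(impl.__name__, failing_customer_cases(impl))
```

Both implementations pass these cases, but they are not identical everywhere. For a $16.65 bill in the US, the legacy path rounds 2.4975 to 2.50 and then to $3, while rounding once gives $2. A boundary case like that belongs in the customer tests, with the system owner deciding which answer is right. That is how this approach keeps a cleanup from silently changing what users see.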

Initial Focus

When you begin to write automated tests for a legacy application, you need to focus on customer tests. Whether your application is web-based, desktop, a batch job, or a service, focus your attention on how the software is used. Avoid writing tests that verify how the outputs are retrieved, calculated or formatted. Just verify that the user outputs are correct for the provided inputs.

Defects

If you’re like me, writing only customer tests seems like a bad idea. The right time to write unit and integration tests for the internal implementation is when you receive defects. Unfortunately, this will significantly slow down your response time. However, it will also slow the overall hemorrhaging of the system. Defects are the right time to add internal tests because you are focused on specific functionality and you generally have access to business resources. The best time to write a test is when you are focused on solving a problem and clearly understand the business need. In legacy software, this is often when you fix defects.
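Here is a minimal sketch of what a defect-driven test can look like, again in Python's `unittest`. The defect, the `round_tip` function and the minimum-tip rule are all hypothetical. Imagine users reporting that small bills show a $0 tip, and the system owner confirming that a positive bill should always yield at least one whole unit.

- The first test is named after that business rule and reproduces the reported defect.
- The other two pin existing behavior that the fix must not disturb.

```python
import unittest
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical business rule, confirmed with the system owner while fixing the defect.
MINIMUM_TIP = Decimal("1")

def round_tip(raw_tip: Decimal) -> Decimal:
    """Round to whole currency units; a positive tip never rounds down to zero."""
    rounded = raw_tip.quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    if raw_tip > 0 and rounded < MINIMUM_TIP:
        return MINIMUM_TIP
    return rounded

class RoundTipDefectTests(unittest.TestCase):
    # Written first to reproduce the reported defect; it failed before the fix.
    def test_small_positive_tip_is_never_shown_as_zero(self):
        self.assertEqual(round_tip(Decimal("0.30")), Decimal("1"))

    # Pins existing behavior that the fix must not change.
    def test_normal_tips_still_round_half_up(self):
        self.assertEqual(round_tip(Decimal("2.50")), Decimal("3"))
        self.assertEqual(round_tip(Decimal("6.00")), Decimal("6"))

    def test_zero_bill_gives_zero_tip(self):
        self.assertEqual(round_tip(Decimal("0")), Decimal("0"))

if __name__ == "__main__":
    unittest.main(argv=["regression"], exit=False)
```

A later "fix" that reintroduced plain rounding would break the first test, which is the moment to go back to the business before changing the rule.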

Several years ago I started writing automated tests for a legacy system that I had been supporting for years. Once I committed to writing tests for defects, no matter how long it took, I quickly reaped the benefits. Within a few months, I broke a test while fixing a new defect. After I ruled out the common causes of test failures, I realized that the new requirements violated an existing business rule. I went back to the system owner, and they amended the defect to be consistent with the rest of the system.

How many times have I broken existing code with my insightful and timely “fixes”? Based on my defect rates before I started writing automated tests, way too often. Now, it’s possible that my incompetence exceeds that of my readers. Nevertheless, it is wise for you to guard your amazing code from the incompetent fools that you work with. Who knows, I might get the privilege of working on your code, and I will certainly screw it up without the safety net of automated tests.

New Features

Another opportunity to write full-scale automated tests in a legacy system is during new feature development. This is a perfect time to add customer tests for the new features and to write unit and integration tests for their internal implementation. Don’t allow laziness to push this new code into the “legacy” bucket of code without automated tests.

Conclusions

Legacy software needs a custom testing strategy. For new systems we write automated tests while we are solving the problem at hand. We have the context and focus necessary to write relevant tests. On the other hand, testing legacy systems should be approached from the outside in. Customer interactions must be preserved through automated tests while internal implementation testing should be delayed until the appropriate time.

The right time for testing internal behavior is during defect resolution. While this will slow down your defect turnaround time, it will prevent future “fixes” from “unfixing” your application. Defects provide the focus and context necessary to write relevant internal implementation tests. This strategy is not a quick fix, but it will slowly move you from being reactive to being proactive with your legacy systems.